Empowering Quoting and Program Execution for Automotive Suppliers - Part 2 of 3

This article is part 2 of a 3-part series. If you haven’t read part 1 yet, here’s a link.

Creating A Foundation of Success

Many automotive suppliers are heavily invested in legacy point solutions to support activities across the enterprise. While these point solutions usually work well at performing a specific function, they often create silos that impede coordination. Activities like a simple quote revision or routine APQP corrective action can be cumbersome and error-prone. These islands of data create a range of problems that can be broadly grouped across three categories: data, process, and people.

- 1) Data – Data-related challenges can manifest as duplicated information, a lack of reusable data, and limited access to timely information.

- 2) Process – Controlling change is critical in complex and fast-changing environments. Ensuring business rules and workflows are adhered to helps avoid corrective actions, minimizes waste and scrap, and encourages multidisciplinary decision making.

- 3) People – How often are emails used to send sensitive OEM requirements? It is critical to balance data security with the need for effective collaboration so that OEM data is not compromised. How can all the stakeholders contribute to winning business and executing programs?

Creating a foundation for success requires that the challenges related to data, process, and people are addressed. But before diving into these issues, it’s important to understand an underlying principle that will ensure success when tackling these three issues.

A Platform-Based Approach

How should automotive suppliers respond? Many companies have tried replacing or updating legacy systems but, all too often, the process is disruptive to end users and costs are prohibitive. Adding another one-off solution or connector between silos usually doesn’t work either and only worsens the problem. In actuality, most suppliers already have the right tools and solutions in place!

These tools, however, lack the underlying “connectivity” to keep the data, processes, and people in sync. Improving connectivity is the central value that informs a platform-based technology strategy. The preoccupation with improving, fixing, adding, removing, or consolidating a specific solution is a secondary concern.

“Improving overall connectivity is the primary concern. The common preoccupation with improving, adding, removing, consolidating, or upgrading a legacy solution is a secondary concern.”

CIMdata, an analyst organization specializing in the product lifecycle management industry, recently commented on these silos and suggested, “…companies need a platform and technology that can fill the large process gaps by managing workflows and capturing data while connecting and coordinating existing data silos.” They further assert, “Companies get stuck with their legacy systems and solutions, which in turn impairs their abilities to respond to these challenges.”

Next-Generation Product Lifecycle Management Platforms

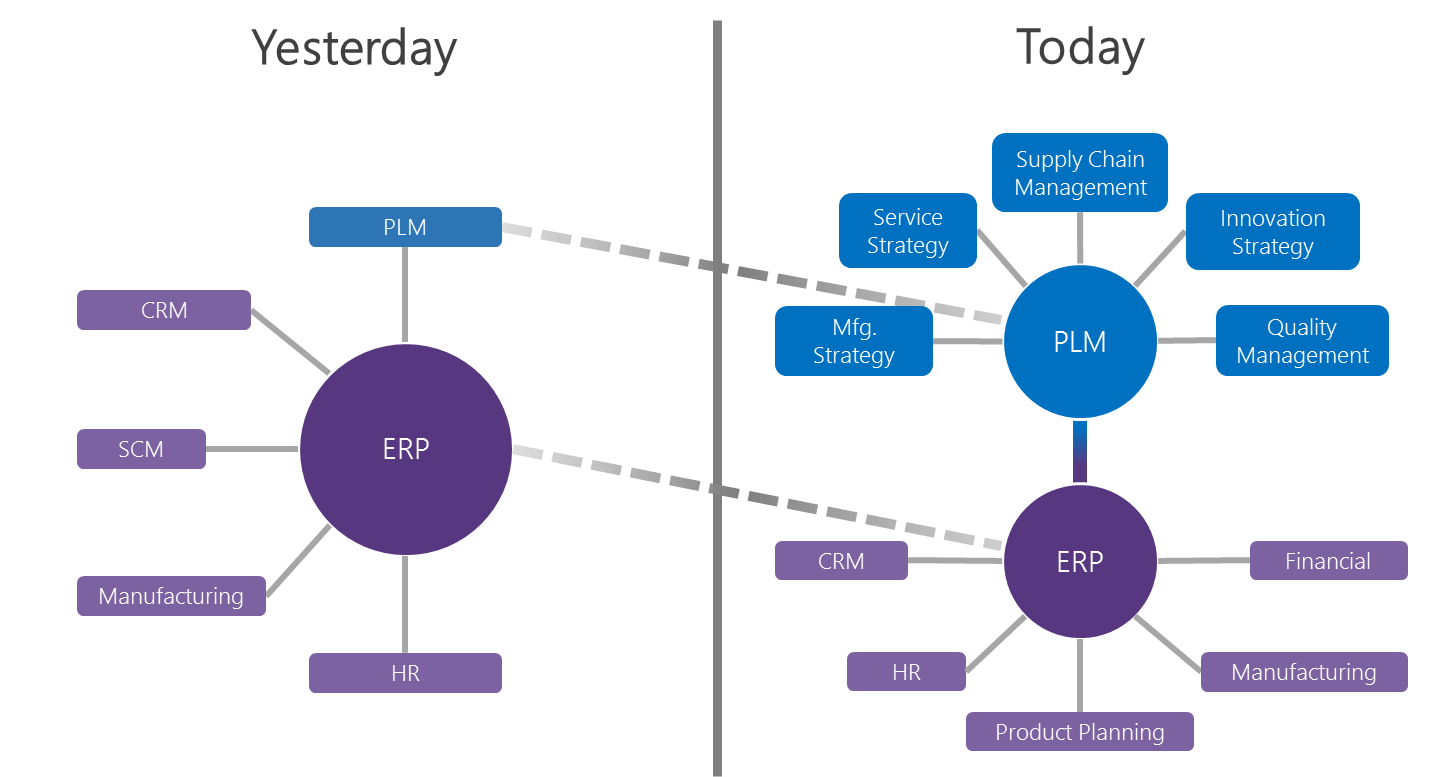

Today, the leading automotive suppliers and industrial manufacturers are looking to product lifecycle management (PLM) to fuel platform-based digital transformation. Modern PLM has come a long way from its origins in engineering and product data management. Figure 4 illustrates a high-level model of how next-generation PLM solutions are playing a role in connecting the enterprise.

Solutions and applications that operate against a PLM platform are claiming an ever-increasing slice of the enterprise software mix, not because PLM is replacing existing solutions but because it is connecting the organization’s technology silos. Figure 5 looks at this trend from a different angle and highlights the horizontal nature of PLM’s growth from engineering in the 1990s into sales, manufacturing, and even services/support today.

John Sperling, the PLM product manager at Aras Innovator, was an early advocate of this platform-based approach and articulated it well during a 2012 industry event, saying, “… tomorrow’s [PLM] solutions will be platforms, not standalone applications. These platforms must provide the building blocks for applications and the connective frameworks that synchronize a company’s data and processes.” He goes on to say, “For a long time, the idea of digital transformation seemed unattainable, too disruptive, and expensive. [A] PLM platform, however, is the ideal approach to incremental and low risk digital transformation. The era of monolithic, rip and replace implementations is over.”

Figure 4 - The Evolving Role of PLM in the Enterprise Software Mix


Figure 5 – Comparison of Enterprise Software Usage for Industrial Manufacturers Across the Product Lifecycle

The high-level architecture of a modern PLM platform is illustrated in Figure 6. The platform comprises three components, with the core framework, functionality, and business solutions operating between the data and the integrated applications. In contrast, legacy PLM solutions follow a product data management (PDM) paradigm in which the data and integrated applications run as a monolithic service, and “connectivity” is often an afterthought. Next-generation PLM platforms are designed from the ground up to enhance connectivity while supporting the ecosystem and building blocks for strategic applications such as quality management, APQP, and NPI.

Figure 6 - High-Level Architecture of a Modern PLM Platform

It’s finally possible to synchronize the data, processes, and people across the organization, in turn creating a “single source of truth” for product information, establishing repeatable processes, and supporting real-time collaboration. To win business and execute programs on time and within budget, it is critical that automotive suppliers consider the benefits of a PLM platform.

Data Cohesion

Flexible data models are central to platform-based PLM. Figure 7 illustrates how data and item connectivity would be modeled in an industrial manufacturing or automotive supplier environment. Each circle and rectangle represents an information silo, while the lines represent relationships supported by the PLM platform. Connecting these silos promotes better management, visibility, and data reuse.

Figure 7 - Data and Item Connectivity Within a PLM Platform

Given that these data sources are connected and related, users and business applications can traverse the relationships and access data that corresponds to a program, quote, part, etc.

In this example, the part/assembly is exploded to highlight its attributes such as purchase costs, required tools, and labor. These attributes are single instances and can be accessed by other items through their connected relationships. Thus, various calculations and analyses, such as the impact of a change on a quote or on labor requirements, can readily be performed. The exploded view of manufacturing process planning (MPP) illustrates how its definition and properties can be referenced from the part/assembly, among others. This reference-based definition would be true of any application running on top of a cohesive data model, such as APQP, change management, or quotation.
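The single-instance idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular PLM product stores data: the class names (`Part`, `QuoteLine`, `Quote`), the part numbers, the costs, and the labor rate are all hypothetical. The point is that a quote holds references to parts rather than copies, so a change made once on the part shows up wherever the part is used.

```python
from dataclasses import dataclass, field


@dataclass
class Part:
    """A single instance of a part; other items hold references, not copies."""
    number: str
    purchase_cost: float
    labor_hours: float


@dataclass
class QuoteLine:
    part: Part       # reference to the shared Part instance
    quantity: int


@dataclass
class Quote:
    name: str
    labor_rate: float
    lines: list = field(default_factory=list)

    def total(self) -> float:
        # The total is computed by traversing relationships at read time,
        # so it always reflects the current part attributes.
        return sum(
            line.quantity
            * (line.part.purchase_cost + line.part.labor_hours * self.labor_rate)
            for line in self.lines
        )


def where_used(part, quotes):
    """Return the names of the quotes that reference the given part."""
    return [q.name for q in quotes if any(line.part is part for line in q.lines)]


# Hypothetical example data
bracket = Part("BRK-100", purchase_cost=4.0, labor_hours=0.5)
housing = Part("HSG-200", purchase_cost=10.0, labor_hours=1.0)

quote_a = Quote("Q-A", labor_rate=20.0,
                lines=[QuoteLine(bracket, 10), QuoteLine(housing, 2)])
quote_b = Quote("Q-B", labor_rate=20.0, lines=[QuoteLine(housing, 5)])

before = quote_a.total()
bracket.purchase_cost = 5.0   # one change, made on the single instance
after = quote_a.total()
impact = after - before       # impact of the change on the quote
```

Because the quote never copies the part's cost, there is nothing to re-enter when the cost changes, and `where_used(housing, [quote_a, quote_b])` answers the "which quotes are affected?" question directly from the relationships.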

Benefits

A cohesive data model helps minimize data reentry, creates robust where-used relationships, and lays the groundwork for better workflow and process management. This means fewer data entry mistakes, less duplication, improved searching, and processes that operate with confidence against a single instance. But perhaps the most important benefit is the ability to reuse data.

To illustrate the possibilities of data reuse, advanced product quality planning (APQP) is a perfect place to start. The very nature of the APQP process is to collect, analyze and assemble a broad range of data. At a high level, the diagram below shows how data elements can be referenced and reused at different phases in the process.

Figure 8 - APQP Data Reuse Diagram

Hence, maintaining selected data items in one place and then referencing them during other phases drives significant efficiencies, eliminates duplication errors, and improves visibility. To extend this concept further, the properties and items defining this product, such as CAD files, can be referenced by any number of other programs. Once this data model is in place, maintaining a true “digital twin” is not only feasible but relatively easy.

Process Repeatability

Once silos are connected and a flexible data model is in place, processes (workflows) are needed to effectively manage change and business rules.

Repeatable Flexibility

Repeatability and flexibility might sound like opposing qualities, but in competitive, fast-paced industries, repeatability is a function of flexibility. In the long run, suppliers with rigid workflows will simply be out-maneuvered by the competition – and you can’t repeat a process if you’re out of business. Even in the short term, no two programs, quotes, or corrective actions are ever the same. There are always small deviations from the formal process. Many manufacturers dismiss these deviations as unmanageable, chaotic variation. Dismissing this variation erodes efficiency, profitability, and the ability to ensure repeatability in the long run. How can repeatable flexibility be achieved?

“… in competitive, fast-paced industries, repeatability is a function of flexibility.”

Just as a cohesive data model encourages data reuse, a modern workflow framework allows for repeatable flexibility. To achieve this, a workflow modeling framework must have the following qualities:

- seamless integration into the PLM Platform

- access to reference items/data

- extensive configurability

Leading PLM platforms have workflow modeling frameworks that allow users to configure robust workflows from scratch or leverage pre-defined workflows. Aras Innovator, for example, has templates for the production part approval process (PPAP), engineering change notices (ECN), and even sub-workflows that operate against the APQP process. These ready-to-use templates can be tailored or applied as-is.

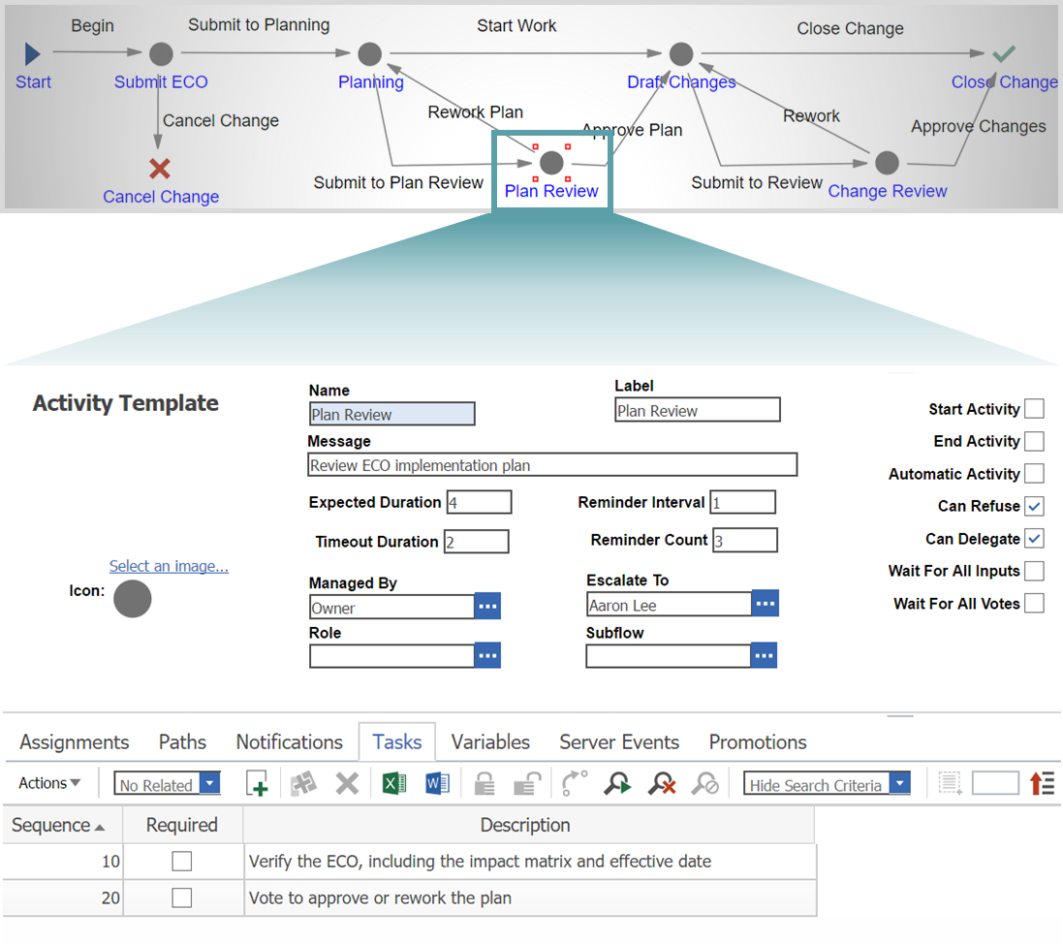

While templates are great “business accelerators,” configurability is critical. This is the only way suppliers can manage and control for the unique variation in each quote, project, change, etc., while still adhering to the core business rules of each. Figure 9 highlights a modified engineering change order (ECO) inside of Aras Innovator.

The first section is a graphical representation of the workflow and becomes a powerful drag-and-drop workflow editor when in edit mode. Between the start and end points, the diagram is made up of nodes (Activities) and connectors (Paths), each of which has properties and attributes that define how the workflow behaves.

Figure 9 – Configurable Workflow (Images provided courtesy of Aras Corp)

Below the diagram, the “Plan Review” activity’s properties are exploded. The configurable range of possible behavior is extensive. These types of next-generation workflow configurators can manage virtually any process across the enterprise, everything from escalation rules and sign-offs to notifications and sub-workflows.

While this type of functionality is best-in-class, it is important to remember that true business value is driven by how well the workflow framework is connected to the broader PLM platform and its underlying data and items. Some of the properties in Figure 9 are simple, such as activity duration and reminder interval. But other, more critical fields may need to reference, for example, the actual part the ECO is operating against, the people responsible for each activity, and the ensuing engineering design requests (EDRs). It is critical that the underlying PLM platform orchestrates this interconnected behavior and maintains the digital thread.
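To make the Activity/Path structure concrete, here is a minimal Python sketch of a configurable workflow. It is not the Aras Innovator API or data model; the classes, the "Draft Change" activity, the outcome names ("Approve", "Reject"), the assignees, and the durations are assumptions made for illustration. Activities carry simple properties such as duration and reminder interval, paths connect activities by outcome, and the workflow itself holds a reference to the part it operates against.

```python
from dataclasses import dataclass, field


@dataclass
class Activity:
    """A workflow node with a few simple, configurable properties."""
    name: str
    duration_days: int = 0
    reminder_interval_days: int = 1
    assignee: str = ""


@dataclass
class Workflow:
    name: str
    part_number: str   # reference to the item the change operates against
    activities: dict = field(default_factory=dict)
    paths: dict = field(default_factory=dict)  # (source, outcome) -> target

    def add_activity(self, activity):
        self.activities[activity.name] = activity

    def add_path(self, source, outcome, target):
        self.paths[(source, outcome)] = target

    def run(self, decisions, start="Start", end="End", max_steps=50):
        """Follow paths from start to end.

        `decisions` maps an activity name to the outcomes chosen on each
        successive visit; activities without a decision take "default".
        """
        pending = {name: list(outcomes) for name, outcomes in decisions.items()}
        current, visited = start, [start]
        while current != end:
            if len(visited) > max_steps:
                raise RuntimeError("workflow did not reach the end activity")
            queue = pending.get(current, [])
            outcome = queue.pop(0) if queue else "default"
            current = self.paths[(current, outcome)]
            visited.append(current)
        return visited


# A hypothetical ECO with one review loop
eco = Workflow("ECO", part_number="BRK-100")
for activity in [
    Activity("Start"),
    Activity("Draft Change", duration_days=3, assignee="engineer"),
    Activity("Plan Review", duration_days=2, assignee="review board"),
    Activity("End"),
]:
    eco.add_activity(activity)

eco.add_path("Start", "default", "Draft Change")
eco.add_path("Draft Change", "default", "Plan Review")
eco.add_path("Plan Review", "Approve", "End")
eco.add_path("Plan Review", "Reject", "Draft Change")

# The review board rejects once, then approves.
route = eco.run({"Plan Review": ["Reject", "Approve"]})
planned_days = sum(eco.activities[name].duration_days for name in route)
```

The rejected review sends the change back to drafting without anyone redefining the process: the deviation is handled by a configured path, which is the sense in which a flexible workflow stays repeatable.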

Stay Tuned

In the next Practical PLM blog post, we’ll finish this section by looking at the third factor, people, in the data, process, and people mix. Then we'll explore how product lifecycle management (PLM) can support the day-to-day activities surrounding APQP, PPAPs, and quoting.